
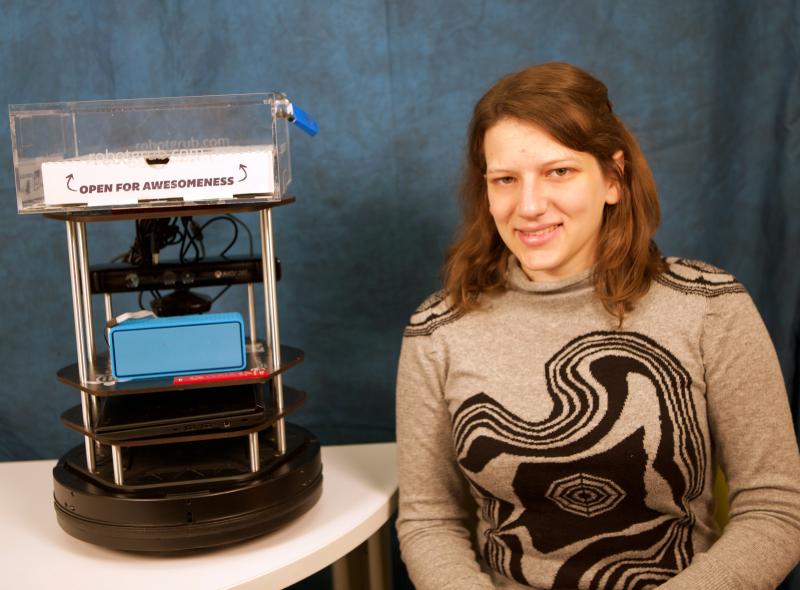

Serena Booth, A.B. '16, a computer science concentrator, with the robot, Gaia, which she used to examine the concept of over-trusting robotic systems. (Photo by Adam Zewe/SEAS Communications.)

If Hollywood is to be believed, there are two kinds of robots: the friendly and helpful BB-8s, and the sinister and deadly T-1000s. Few would suggest that “Star Wars: The Force Awakens” or “Terminator 2: Judgment Day” is scientifically accurate, but the two popular films raise the question, “Do humans place too much trust in robots?”

The answer to that question is as complex and multifaceted as robots themselves, according to the work of Harvard senior Serena Booth, a computer science concentrator at the John A. Paulson School of Engineering and Applied Sciences. For her senior thesis project, she examined the concept of over-trusting robotic systems by conducting a human-robot interaction study on the Harvard campus. Booth, who was advised by Radhika Nagpal, Fred Kavli Professor of Computer Science, received the Hoopes Prize, a prestigious annual award presented to Harvard College undergraduates for outstanding scholarly research.

During her month-long study, Booth placed a wheeled robot outside several Harvard residence houses. While she controlled the machine remotely and watched its interactions unfold through a camera, the robot approached individuals and groups of students and asked to be let into the keycard-access dorm buildings.

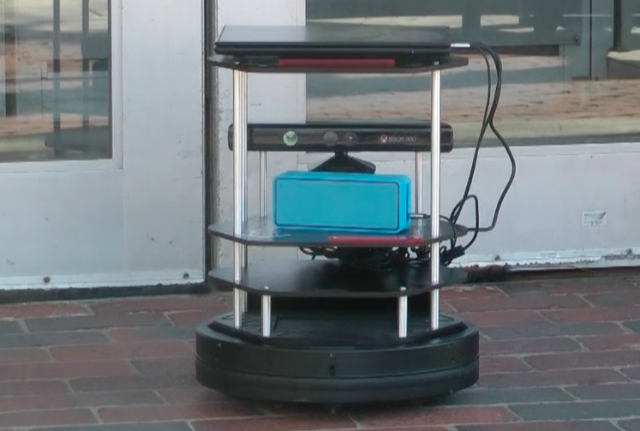

Booth's robot, Gaia, waits outside the entrance to Quincy House. (Image courtesy of Serena Booth.)

When the robot approached lone individuals, they helped it enter the building in 19 percent of trials. When Booth placed the robot inside the building and it approached individuals asking to be let outside, they complied with its request 40 percent of the time. When it approached groups, however, the machine gained access to the building in 71 percent of cases, suggesting that people may feel safety in numbers when interacting with robots.

“People were a little bit more likely to let the robot outside than inside, but it wasn’t statistically significant,” Booth said. “That was interesting, because I thought people would perceive the robot as a security threat.”

In fact, only one of the 108 study participants stopped to ask the robot if it had card access to the building.

But the human-robot interactions took on a decidedly friendlier character when Booth disguised the robot as a cookie-delivering agent of a fictional startup, “RobotGrub.” When approached by the cookie-delivery robot, individuals let it into the building 76 percent of the time.

“Everyone loved the robot when it was delivering cookies,” she said.

The cookie delivery robot successfully gained entrance into the residence hall. (Image courtesy of Serena Booth.)

Whether they were enamored with the knee-high robot or terrified of it, people displayed a wide range of reactions during Booth’s 72 experimental trials. One individual, startled when the robot spoke, ran away and called security, while another gave the robot a wide berth, ignored its request, and entered the building through a different door.

Booth had thought individuals who perceived the robot to be dangerous wouldn’t let it inside, but after conducting follow-up interviews, she found that those who felt threatened by the robot were just as likely to help it enter the building.

“Another interesting result was that a lot of people stopped to take pictures of the robot,” she said. “In fact, in the follow-up interviews, one of the participants admitted that the reason she let it inside the building was for the Snapchat video.”

Serena Booth and her robot, Gaia, in its cookie-delivery disguise. (Photo by Adam Zewe/SEAS Communications.)

While Booth’s robot was harmless, she is troubled that only one person stopped to consider whether the machine was authorized to enter the dormitory. If the robot had been dangerous—a robotic bomb, for example—the effects of helping it enter the building could have been disastrous, she said.

A self-described robot enthusiast, Booth is excited about the many different ways robots could potentially benefit society, but she cautions that people must be careful not to put blind faith in the motivations and abilities of the machines.

“I’m worried that the results of this study indicate that we trust robots too much,” she said. “We are putting ourselves in a position where, as we allow robots to become more integrated into society, we could be setting ourselves up for some really bad outcomes.”

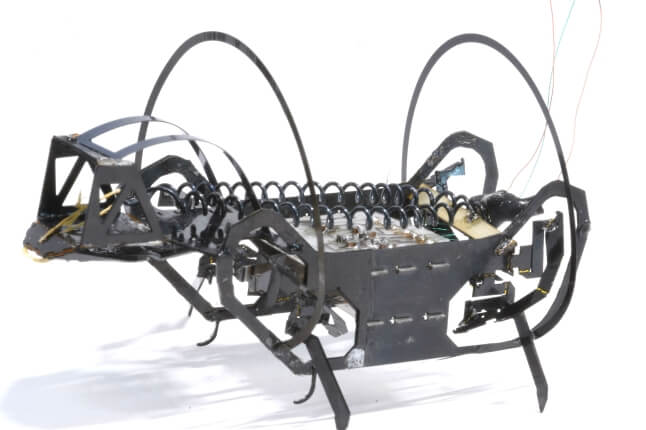

Piggybacking Robots: Overtrust in Human-robot Security Dynamics

For her senior thesis, computer science concentrator Serena Booth examined the problem of over-trusting robotic systems.



Press Contact

Adam Zewe | 617-496-5878 | azewe@seas.harvard.edu